Nvidia (NVDA) made big news this week at its GTC conference when it announced it’s building its very first standalone CPU. For a company that made its fortune on the power of its graphics cards, it’s an entirely new direction.

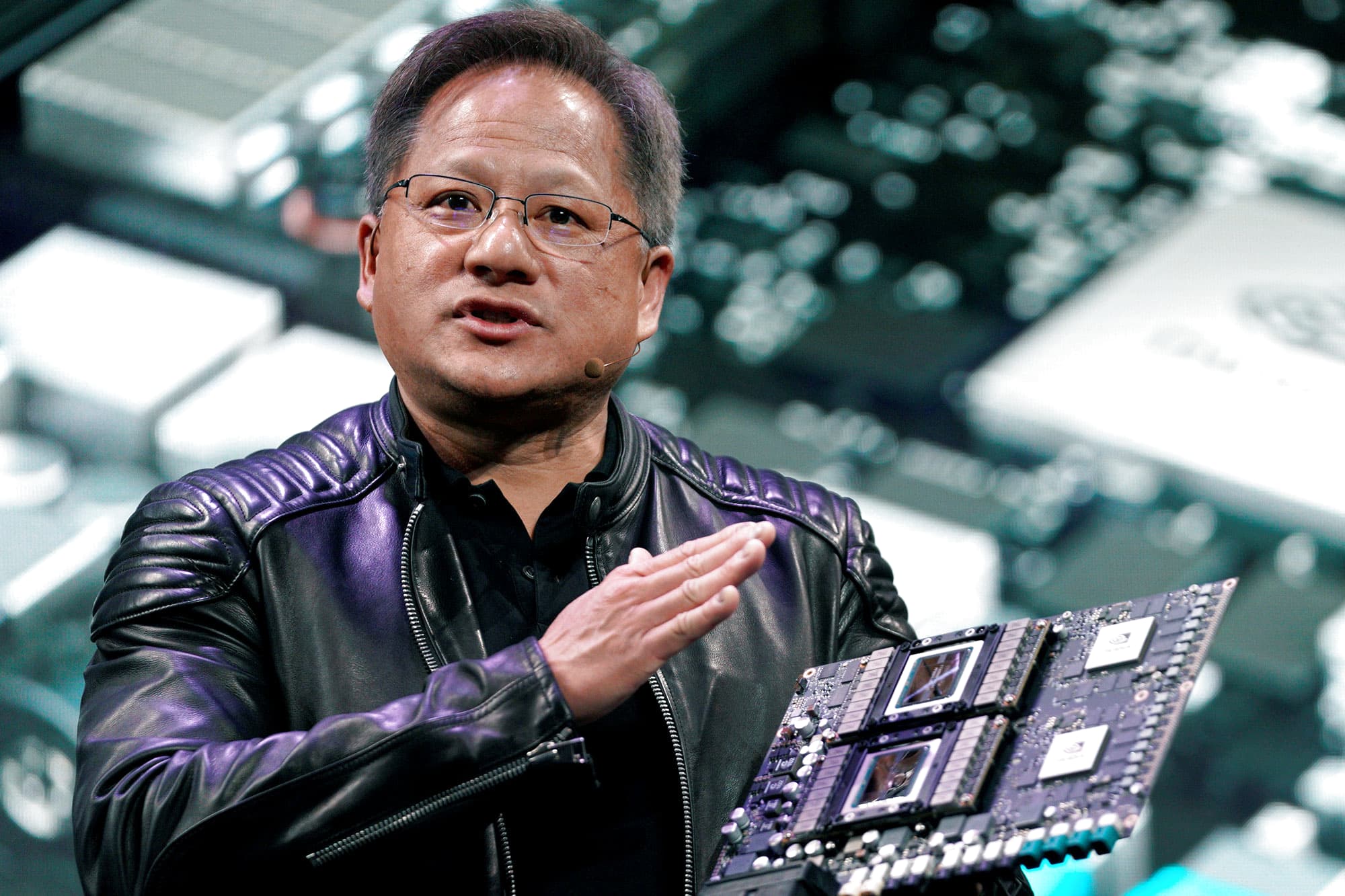

And according to CEO Jensen Huang, the superchip, called Grace, is a powerful addition to the company’s lineup.

“This is a new growth market for us,” Huang told Yahoo Finance during an interview.

“The entire data center, whether it’s for scientific computing, or for artificial intelligence training, or inference to the application, the deployment of AI, or data centers at the edge, all the way out to an autonomous system, like a self-driving car, we have data center-scale products and technologies for all of them,” he added.

Grace, named for computer programming pioneer Grace Hopper, features 144 cores and twice the memory bandwidth and energy efficiency of leading high-end server chips, according to Nvidia.

The chip, which Nvidia calls a superchip because it’s two CPUs in one, is specifically designed for use in AI systems, something the company has invested in heavily in recent years.

“For the very first time, we’re selling CPUs. Today, we connect our GPUs to available CPUs in the market, and we’ll continue to do that. The market is very big; there are a lot of different segments,” Huang said.

“Artificial intelligence or scientific computing, the amount of data that we have to move around is so much. So this gives us the opportunity to offer a revolutionary type of product to an existing marketplace for a new type of application that is really sweeping computer science.”

In addition to Grace, Nvidia unveiled its new Hopper H100 data center GPU. That chip, which packs 80 billion transistors, offers a massive step up in performance compared with its predecessor, the A100 GPU, Nvidia said.

GPUs are important for high-performance computing and AI applications because they can handle many processes at once. And Nvidia has leveraged those capabilities for years.

“If you think about our company today, it’s really a data center-scale company. We offer GPUs and systems and software and networking switches,” Huang explained.

“And so the entire data center, whether it’s for scientific computing, or for artificial intelligence training, or inference to the application, the deployment of AI, or data centers at the edge, or all the way out to an autonomous system, like a self-driving car, we have data center-scale products and technologies for all of them.”

But as chips continue to shrink and the number of transistors packed onto each CPU or GPU increases, there’s always the question of whether chip makers like Nvidia are running up against the limits of the silicon that makes up their semiconductors.

Huang, however, says that’s not the case, and that chip makers still have plenty of time thanks to the power of cloud computing.

“It is absolutely the case that transistor scaling is slowing. We’re getting more transistors, but the … rate of progress has slowed tremendously,” Huang explained.

“In the cloud, you can make computers as big as you like. And in fact, if you look at the computer that we announced today, it has incredible scale. For example, 80 billion transistors, we have eight of those chips in one system. And then we take 32 of those systems, and we put it into one giant GPU, they work like one giant GPU.”

Nvidia, of course, isn’t the only company offering data center GPUs to customers interested in AI and high-performance computing. AMD (AMD) sells its own GPU-powered solution that it claims can easily take on Nvidia’s prior-generation data center GPU, the A100.

Nvidia, meanwhile, says the Hopper GPU will blow the doors off the A100. Now we just need to find out how it stacks up against AMD’s offerings.
